Hello,

I've been struggling to get a working cluster of two Firefly Perimeter firewalls on a single ESXi 5.1 host. At first I tried ESXi 5.5, since it is listed as supported, but I couldn't get it to work, so I switched to 5.1 because a lot of websites said it could not be done on 5.5.

I've tried to follow the instructions provided by Juniper here and here, but I must be missing something, and it is driving me crazy!

Basically what I have done is:

- Create two new vSwitches, one for the HA control link and another for the HA fabric link. I kept the first interface on the default vSwitch, connected to the only NIC in the ESXi host.

- Deploy the OVF template provided by Juniper for evaluation twice: junos-vsrx-12.1X47-D10.4-domestic.ova

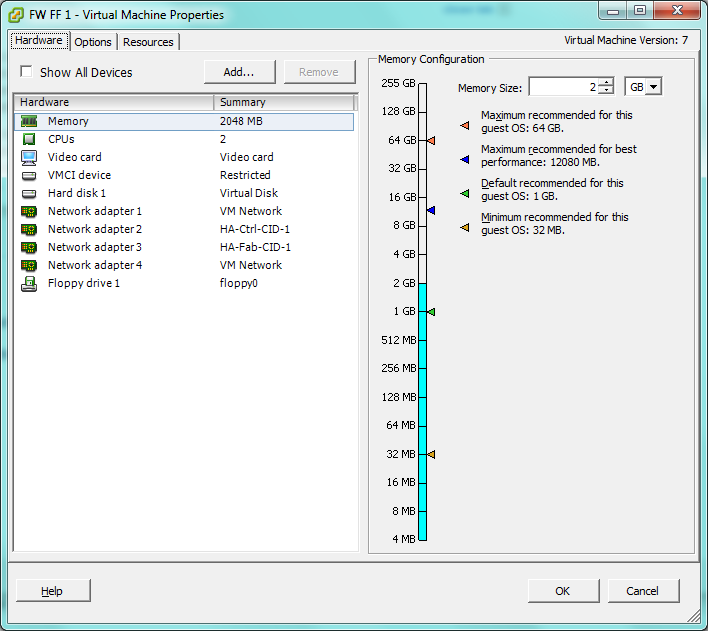

- Add two new interfaces to each VM, for a total of four: the first for the HA mgmt link, the second for the HA ctrl link, the third for the HA fabric link, and the fourth for a regular data link. The MTU on all vSwitches has been set to 9000 (I still don't really understand why). It can be seen in this image:

- Then I boot up both VMs and apply the configuration recommended by Juniper on both (I've also tried applying it on just one of the two, expecting the cluster to do its job, but it didn't work):

set groups node0 interfaces fxp0 unit 0 family inet address 192.168.42.81/24

set groups node0 system host-name fireflyperimeter-node0

set groups node1 interfaces fxp0 unit 0 family inet address 192.168.42.82/24

set groups node1 system host-name fireflyperimeter-node1

set apply-groups "${node}"

set system root-authentication plain-text-password

New password: password

Retype new password: password

set system services ssh
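To avoid pasting mistakes when entering the per-node groups on both VMs, the commands above could be generated from a small table. This is only a minimal sketch: `NODES` and `node_group_commands` are hypothetical names made up for illustration, the addresses and host names are the ones from this post, and the sketch spells the statement `host-name`, as Junos does.

```python
# Sketch: build the per-node group commands from this post for both cluster nodes.
# NODES and node_group_commands are hypothetical names used only for illustration.
NODES = {
    "node0": {"fxp0": "192.168.42.81/24", "host_name": "fireflyperimeter-node0"},
    "node1": {"fxp0": "192.168.42.82/24", "host_name": "fireflyperimeter-node1"},
}


def node_group_commands(nodes):
    """Return the 'set' commands for the node groups, in paste order."""
    commands = []
    for name, params in nodes.items():
        # Management (fxp0) address for this node's group.
        commands.append(
            f"set groups {name} interfaces fxp0 unit 0 family inet address {params['fxp0']}"
        )
        # Host name for this node's group.
        commands.append(f"set groups {name} system host-name {params['host_name']}")
    # apply-groups "${node}" lets each node inherit only its own group.
    commands.append('set apply-groups "${node}"')
    return commands


if __name__ == "__main__":
    print("\n".join(node_group_commands(NODES)))
```

Running the script prints the five `set` lines in order, so the same text can be pasted in configuration mode on either VM.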

- At this point I commit the config and assume both units are ready to join the cluster, so I try to set it up:

· In node 0:

set chassis cluster cluster-id 1 node 0 reboot

· In node 1:

set chassis cluster cluster-id 1 node 1 reboot

Then both nodes reboot, as expected, but when they come back up, both end up as primary after a brief hold period. On both nodes, the output of show chassis cluster status is exactly the same:

Also, the chassisd log doesn't seem to show anything except that it can't connect:

Any idea of what I might be missing would be much appreciated; this is becoming frustrating!

Thanks and regards.
