Getting to Know the Packet Forwarding Engine:

An Informal Guide to the Engines of Packet Forwarding

By: Salah M. S. Buraiky

This article aims at providing the essential concepts for a beginner network engineer to understand the function, basic workings, components and features of the Packet Forwarding Engine (PFE), the intelligent component of the forwarding plane (data plane). It aims at creating a model of the PFE in the mind, leading to an appreciation of its criticality and its central role in a router's performance and features. It assumes that the reader knows the basics of networking and routing protocols. It will not furnish you with enough hardware knowledge to be dangerous, only brave.

This article is highly influenced by the predominance of Ethernet and Multi-Protocol Label Switching (MPLS) in today's networks and my familiarity with Juniper Networks, but the fundamental concepts are the same for all Packet Forwarding Engines. This article is closer to a snorkel than a deep dive.

PART ONE: SYNOPTIC VIEW

I. The Making of a Routing Machine (which is also a … Forwarding Machine)

A router is a packet switching device. It is a node in the topology that makes a data network. A router gets a packet on an inbound interface (the ingress interface), looks at its header, where the destination address is, and determines, based on that, the outgoing interface (the egress interface). Routing initially had a dual meaning: sometimes it was used to mean the computation of network paths, while in others it was used to refer to the actual packet movement from an ingress (input) interface to an egress (output) interface. The second meaning is more commonly referred to nowadays as "forwarding".

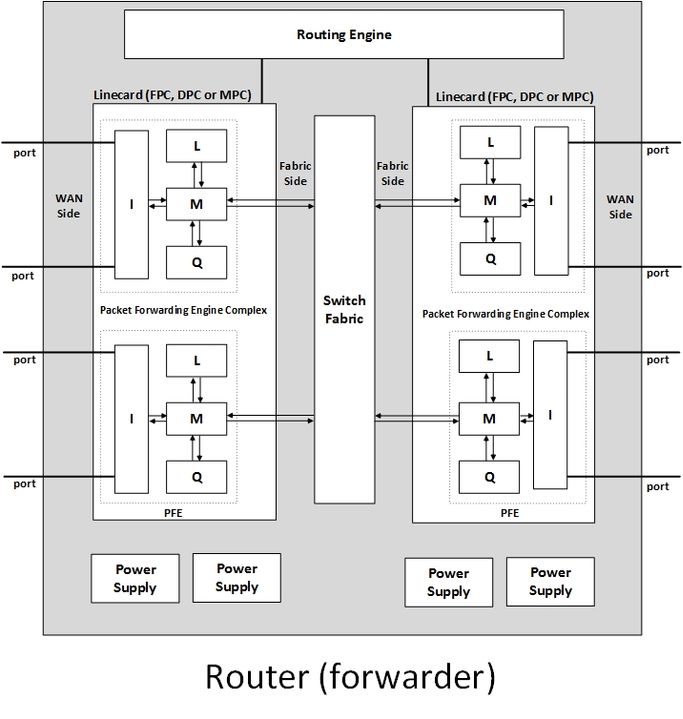

A router, thus, may also be called a "forwarder". Some may even argue that that’s a more apt name given that forwarding is a router’s ultimate goal and that most of the cost and power consumption of a router are due to the forwarding components. In addition to a chassis and power supplies (see the diagram), a router consists of a Routing Engine (RE), that embodies the control plane (also called the routing plane), and a set of linecards interconnected by a switch fabric, that together represent the forwarding plane (also called the data plane). Each linecard hosts the network ports (router interfaces) that send and receive traffic, to and from links, and one or more ASIC (Application Specific Integrated Circuit) chips or chipsets (chip complexes), each called a Packet Forwarding Engine (PFE).

The forwarding intelligence, the ability to parse and understand packet headers, lies in the PFE. This article is about the PFE, which is the pivotal component of the forwarding plane (which we’ll talk about in the coming section), but remember that the forwarding plane, strictly speaking, consists, in addition to the PFE, of the network ports (hosted on linecards along with the PFEs) and the fabric.

The next section will elaborate on the two functional dimensions of a router, namely the routing plane and the forwarding plane.

II. Plain Talk about Planes: The Dichotomy of Control Plane and Forwarding Plane

A. Defining the Planes

A router consists, functionally, of two planes:

1- The Control Plane: The function of the control plane is to discover the network's topology and compute loop-free, optimal routes. It is where routing protocols, such as OSPF, IS-IS and BGP, and signaling protocols, such as RSVP and LDP, run and where the routing tables (also called Routing Information Bases or RIBs), including multicast reverse path checking tables and VRF tables, are instantiated and populated. It is where the kernel reigns and daemons live. The control plane also provides an interface for configuring and monitoring the router (configuration and monitoring are considered by some to be a separate plane called the Management Plane, but we’ll stick to the dichotomy in this essay).

The control plane is usually implemented on a Routing Engine (RE), also known as a Supervisor Engine or a Route Processor, among other names. It is based on an operating system, called a Network Operating System (NOS), such as Junos, running on a general-purpose processor, because the computational and memory resources it requires are of such a complexity that only a software implementation is feasible. The control plane is the router's brain and its computational element.

2- The Forwarding Plane: The function of the forwarding plane is to transfer packets from an ingress interface (port) to an egress interface (port) so as to move each packet a hop closer to its ultimate destination. By traversing a chain of forwarding plane instances, each contained within a router, a packet completes its voyage from source to destination. Unlike the control plane, which only looks at control packets (such as OSPF Link State Updates and RSVP-TE PATH and RESV messages) and management packets (such as SNMP messages), the forwarding plane processes each and every packet arriving at the router. The forwarding plane is a router’s muscles and its communication element.

B. Routing Time versus Forwarding Time

A proper understanding of networking dynamics calls for establishing a clear distinction between what is relevant to routing time (also called convergence time) and what is relevant to forwarding time. To begin with, mapping the topology and computing loopless paths is clearly the routing plane’s duty. The forwarding plane gets the routes from the control plane and has to trust them. Lacking the global topological view, it has no way to decide on their looplessness or optimality. While routing time is a time for evaluation and reflection, forwarding time is a time for pure and immediate action.

On the other hand, when (equal cost) multiple paths (next hops) exist (i.e. when Equal Cost Multi Path or ECMP is present), even though the routing plane identifies them and pushes them to the forwarding plane, the routing plane doesn’t decide on the specific next hop taken by each particular flow of packets. This load balancing decision is taken at forwarding time by the forwarding plane.
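To make the division of labor concrete, here is a minimal sketch of a per-flow choice among equal-cost next hops. It assumes a flow is identified by a five-field tuple and uses CRC32 as the hash; real PFEs use their own hash functions and key sets, and the interface names are purely illustrative.

```python
import zlib

def pick_next_hop(flow, next_hops):
    """Pick one ECMP next hop for a flow by hashing its identifying fields."""
    key = "|".join(str(field) for field in flow).encode()
    return next_hops[zlib.crc32(key) % len(next_hops)]

# The routing plane supplied both next hops; the per-flow choice is made here.
next_hops = ["ge-0/0/0", "ge-0/0/1"]              # hypothetical interface names
flow = ("10.0.0.1", "192.0.2.7", 6, 40000, 443)   # src, dst, protocol, sport, dport

# The same flow always maps to the same next hop, so its packets stay in order.
assert pick_next_hop(flow, next_hops) == pick_next_hop(flow, next_hops)
```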

When an action needs to be taken near instantly, it is to be taken at the forwarding plane. Local protection mechanisms (whether based on RSVP-TE, IGP LFA or SR) depend on installing backup paths in the forwarding plane so that they may be immediately enacted without waiting for the lengthy traditional IGP convergence that takes place in the control plane.
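The idea of a pre-installed backup can be sketched as a forwarding entry that carries two next hops. This is a simplified illustration, not how any particular platform stores its protection state; the class name, the `links_up` set and the interface names are assumptions made for the example.

```python
class FibEntry:
    """A forwarding entry holding a primary next hop and a pre-installed backup."""

    def __init__(self, primary, backup):
        self.primary = primary
        self.backup = backup

    def next_hop(self, links_up):
        """Use the primary while its link is up; otherwise fall back at once."""
        return self.primary if self.primary in links_up else self.backup

entry = FibEntry(primary="ge-0/0/0", backup="ge-0/0/1")  # hypothetical names
before_failure = entry.next_hop({"ge-0/0/0", "ge-0/0/1"})
after_failure = entry.next_hop({"ge-0/0/1"})
```

The switch to the backup requires no recomputation of routes: the decision is a local check on state that was installed ahead of time.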

C. The Planes in Perspective

The forwarding plane is the reason a router is built. All else is made to that end. The control plane's function, in addition to providing an interface to manage the router, is to program the forwarding plane with the information required to do its job in the form of a table mapping network destinations to egress interfaces, a table known as the Forwarding Information Base (FIB), or the forwarding table. Routing is distributed; forwarding is local.

At the dawn of routing technology, the control plane and the forwarding plane used to share resources. In this day and age, separating the two is a design tenet. The separation ensures that the forwarding of packets is not impacted by surges of control activity in the control plane and can even continue during brief periods of control plane instability or unavailability. Even after the separation of the two planes, pioneered by Juniper Networks at the end of the 1990s, they remained tightly coupled via a proprietary, platform-specific interface, that is, until the advent of Software Defined Networks (SDN).

With the SDN approach to networking, the control plane doesn’t have to be bundled with the forwarding plane in a single box. The control plane may live far away and command the forwarding plane over the network. In such a context, the control plane element is called a “controller” and may centrally preside over all forwarding machines in the network, using a variety of new “SDN” protocols (such as OpenFlow or the Path Computation Element Protocol, PCEP).

With such an arrangement, the controller can provide operators with an abstract holistic view of the network and enable its programming via an interface called a Northbound Interface. The controller could leverage its global, complete view of the network to provide optimization and agile provisioning. The SDN evolution is virtually all about control plane programmability. Since SDN is essentially a control plane concept, that’ll be the last word we say about it in this discourse.

PART TWO: ABSTRACT VIEW

I. Introducing the PFE

The PFE is the centerpiece of the forwarding plane. It is implemented typically as an ASIC chip or a chipset residing on a linecard. It could also be implemented as a piece of code as in virtualized platforms such as Juniper Networks vMX (Virtual MX), but our focus in this article will be entirely on the hardware PFE.

The PFE is the component that “understands” packets (can decode their headers). In essence, as we will see, the PFE is a header processing and forwarding lookup engine. The PFE houses the FIB (forwarding table) mentioned earlier and uses it, upon inspecting the packet’s header, to determine to which egress port the packet is to be sent. Each entry in the FIB is a masked prefix (a network address coupled with a string of bits that indicate which bits of the address are the network part).

Even though multiple entries can match the destination address in a packet, the most specific match must be chosen. Seeking the best matching entry (the longest, the most specific) in the FIB is called a “route lookup” (even though it is actually a forwarding lookup!). The process of seeking the most specific match is known as the Longest Prefix Match (LPM), a process whose sanctity has remained unchallenged since the net’s infancy (in the case of ECMP, as highlighted earlier, the PFE will select one of the outgoing interfaces).
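As a plain illustration of what "longest match wins" means, the sketch below scans every entry and keeps the most specific one. It is deliberately naive (a linear scan over a Python dictionary) and says nothing about how a PFE actually stores its FIB; the prefixes and interface names are made up for the example.

```python
import ipaddress

def longest_prefix_match(fib, destination):
    """Return the next hop of the most specific prefix containing destination."""
    addr = ipaddress.ip_address(destination)
    best = None
    for prefix, next_hop in fib.items():
        net = ipaddress.ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, next_hop)
    return best[1] if best else None

fib = {
    "0.0.0.0/0": "ge-0/0/0",     # default route
    "10.0.0.0/8": "ge-0/0/1",
    "10.1.0.0/16": "ge-0/0/2",
}
# 10.1.2.3 matches all three entries; the /16 is the most specific.
chosen = longest_prefix_match(fib, "10.1.2.3")
```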

The PFE receives a packet, places it in a temporary memory block called a “buffer”, inspects its destination address, looks for an LPM match for the destination in the FIB (forwarding table) and determines accordingly the next hop and the outgoing interface. It then does some processing to the packet’s header and sends it on its way. The side that connects the PFE to the network ports is called its “WAN Side”, while the side that connects the PFE to the fabric is called its “Fabric Side” (see the diagram).

The above description is a bit oversimplified, as the packet actually arrives encapsulated in a frame (an Ethernet frame most commonly) with layer 2 headers and trailers. Upon entering the PFE, these must be error-checked and stripped away before the packet is processed. Before leaving the router, a layer 2 header and a trailer are also slapped on the packet.

From the description above, it is clear that the PFE must contain a buffer memory to hold packets, must have a memory element for holding the FIB and must have a lookup module that maps the destination address of the packet to a next hop or a bunch of next hops in the case of load balancing over equal cost multipath (ECMP). It must also do any associated header processing (like checksum and TTL adjustments as we’ll see later in a Walk To Remember). The next section will explain the PFE functional blocks that achieve each of these actions.

II. PFE Architecture

In this section we’ll provide an overview of the components of a typical modern PFE (see the diagram).

1. Lookup (L): The Heart of the PFE

Just as the PFE is the soul of the forwarding plane, the soul of the PFE is the routing Lookup Block, known also as the Route Block, L Block, R Block, LU Block or a host of other names normally hinting at its function (could be XL for eXtended Lookup, or SL for Super Lookup, who knows!). Some older Juniper Networks platforms called it an Internet Processor (IP). The Lookup Block hosts the FIB, the real FIB, the FIB that actually forwards. The FIB constructed in the RE is a copy that is in the router's brain. It gets downloaded to the PFE to be actionable. A FIB entry that doesn’t find its way from the RE to the PFE, is as good as non-existent.

The FIB is a table in that it hosts a list of data. In implementation, it is usually a tree-like structure, called a “trie” (coming from the word retrieval and pronounced tree) stored in a fast Dynamic RAM variant (such as Reduced Latency DRAM or RLDRAM). In the Junos world, this tree is called a jtree (or its successor the ktree). A jtree is a binary tree that is used for storing prefixes in a manner that makes searching for a longest match faster and more efficient. Whenever a set of prefixes is to be matched against, think of them as organized in a jtree. A plain hash table is not an option because of the LPM requirement, which is essentially a best match search rather than a simple indexing operation.
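A generic binary trie (not the jtree itself, whose internals are not described here) can be sketched as follows. Each address bit selects a child, and the lookup remembers the last next hop it passed on the way down, which is the longest match. The sketch assumes IPv4 only, and the class and method names are illustrative.

```python
import ipaddress

class TrieNode:
    def __init__(self):
        self.children = [None, None]   # child for bit 0 and for bit 1
        self.next_hop = None           # set only where a prefix ends

class BinaryTrie:
    def __init__(self):
        self.root = TrieNode()

    def insert(self, prefix, next_hop):
        net = ipaddress.ip_network(prefix)
        bits = format(int(net.network_address), "032b")[:net.prefixlen]
        node = self.root
        for bit in bits:
            index = int(bit)
            if node.children[index] is None:
                node.children[index] = TrieNode()
            node = node.children[index]
        node.next_hop = next_hop

    def lookup(self, destination):
        bits = format(int(ipaddress.ip_address(destination)), "032b")
        node, best = self.root, self.root.next_hop
        for bit in bits:
            node = node.children[int(bit)]
            if node is None:
                break                  # no deeper prefix exists
            if node.next_hop is not None:
                best = node.next_hop   # a longer match than any seen so far
        return best

trie = BinaryTrie()
trie.insert("0.0.0.0/0", "ge-0/0/0")
trie.insert("10.0.0.0/8", "ge-0/0/1")
trie.insert("10.1.0.0/16", "ge-0/0/2")
```

The walk visits at most 32 nodes for an IPv4 address regardless of how many prefixes are stored, which is why tree structures scale to very large tables.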

Employing a trie on RLDRAM is not the highest performance option but the most scalable one given the huge routing tables of our times (hundreds of thousands of entries or even a couple of millions). Ternary Content Addressable Memory (TCAM) is much faster in doing LPM but is complex, has a high power consumption and takes up a large area on the chip. TCAM is a specialty memory type that is tailor designed for near instant lookups. A TCAM is hardware-designed so that inputting a destination address will, in a flash, return the corresponding next hop information without searching or iterations (see the Appendix for more about memory types).
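The matching rule of a TCAM can be emulated in software, with the caveat that the hardware compares every row at the same time while the loop below checks them one by one. The sketch assumes the common arrangement in which rows are ordered longest prefix first and the first matching row wins; the function names are hypothetical.

```python
import ipaddress

def build_tcam(routes):
    """Return (value, mask, next_hop) rows ordered longest prefix first."""
    rows = []
    for prefix, next_hop in routes.items():
        net = ipaddress.ip_network(prefix)
        rows.append((int(net.network_address), int(net.netmask), next_hop))
    rows.sort(key=lambda row: bin(row[1]).count("1"), reverse=True)
    return rows

def tcam_lookup(rows, destination):
    key = int(ipaddress.ip_address(destination))
    # Hardware evaluates all rows in parallel; this loop only emulates that.
    for value, mask, next_hop in rows:
        if (key & mask) == value:      # masked-out bits are "don't care"
            return next_hop
    return None

rows = build_tcam({
    "0.0.0.0/0": "ge-0/0/0",
    "10.0.0.0/8": "ge-0/0/1",
    "10.1.0.0/16": "ge-0/0/2",
})
```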

Another essential function of the Lookup Block is identifying the logical interface (called an ifl or a unit by Junos and a sub-interface by other Network Operating Systems) the packet arrived on, for modern routers pretend that each packet arrives not on the physical interface but on a virtual interface contained within it. The determination of an ifl is usually based on a demultiplexing field within the packet, such as a VLAN ID. Each of these ifls is treated as a full-fledged interface in that it gets an IP address and is associated with services such as firewall filters (access lists or ACLs), policers and classifiers, which we’ll talk about later.
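Demultiplexing on a VLAN ID can be sketched by reading the 802.1Q tag of an Ethernet frame and using it as a table key. The mapping table and the logical interface names below are assumptions for illustration; only the tag layout (TPID 0x8100 followed by a 16-bit field whose low 12 bits are the VLAN ID) comes from the 802.1Q standard.

```python
import struct

def vlan_id(frame):
    """Return the 802.1Q VLAN ID of an Ethernet frame, or None if untagged."""
    tpid, tci = struct.unpack("!HH", frame[12:16])  # bytes after the two MACs
    return tci & 0x0FFF if tpid == 0x8100 else None

def find_ifl(ifl_by_vlan, frame):
    """Map the frame to a logical interface using its VLAN ID."""
    return ifl_by_vlan.get(vlan_id(frame))

ifl_by_vlan = {100: "ge-0/0/0.100", 200: "ge-0/0/0.200"}   # hypothetical units
tagged = bytes(12) + struct.pack("!HH", 0x8100, 200) + bytes(46)
ifl = find_ifl(ifl_by_vlan, tagged)
```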

2. Memory (M) Block: the PFE’s Workbench

The Memory Block is the PFE’s linchpin and its guesthouse. It is a buffer that hosts packets arriving at the PFE from the WAN Side. It is usually the PFE block to which all other blocks are connected. Commonly, it is implemented using a fast memory type called Static RAM (SRAM). It is called the Buffer Block, Memory Block, B Block, M Block, XM Block or MQ (as it can do some basic, port-level queueing) or other names alluding to its function. The Memory Block queues packets and manages their dequeuing into the fabric or out to the network ports. It extracts the packet’s header and feeds it to the Lookup Block to determine where the packet should be sent.

Another function usually done by the Buffer (Memory) Block is “cellification”. In what follows we’ll explain what that is, the motivation for it and how it is done. Switching hardware can be better optimized when the data units are of fixed size. Therefore, packets, which are of variable length, are divided into short, fixed-size pieces called cells (or J-Cells in a Juniper Networks router). This cellification is conducted by the PFE (typically by the Memory Block) before the route lookup is performed and before the packet is sent over the fabric (or towards other WAN interfaces in the same PFE).

The first J-Cell, the one that contains the header and determines the packet's forwarding destiny, is called a Notification Cell (NC). The rest are called Data Cells (DC). This cellification happens as soon as the packet is received and initial layer 2 processing is completed.

Only the Notification Cell is read into the Lookup (Route) Block (the Notification Cell is sometimes called the packet’s HEAD). The Data Cells (constituting the packet’s TAIL) wait in a buffer for the Notification Cell to be processed and the next hop to be determined.

After that, the Data Cells stream through the PFE or through the PFE and fabric to the outbound interface (undergoing, remember, a second lookup, if that interface is on a different PFE). They are reassembled into a packet before they leave the egress PFE.
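The head and tail split described above can be sketched in a few lines. The 64-byte cell size is an illustrative value chosen for the example, not one stated in this article, and a real Notification Cell carries more than the raw first bytes of the packet.

```python
CELL_SIZE = 64  # illustrative value; this article does not state a cell size

def cellify(packet):
    """Split a non-empty packet into a notification cell and data cells."""
    cells = [packet[i:i + CELL_SIZE] for i in range(0, len(packet), CELL_SIZE)]
    return cells[0], cells[1:]      # HEAD goes to lookup, TAIL waits in buffer

def reassemble(notification_cell, data_cells):
    """Rebuild the packet on the egress side before it leaves the PFE."""
    return notification_cell + b"".join(data_cells)

packet = bytes(range(200))          # a 200-byte stand-in for a real packet
head, tail = cellify(packet)
restored = reassemble(head, tail)
```

Only `head` would be handed to the Lookup Block; `tail` stays put until the next hop is known.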

In some designs, interfaces are not connected directly to the Buffer Block but are connected to an Interface Block (called I Block or XI Block or something like that) that sits between interfaces and the Memory Block (see the diagram). In such cases, functions usually performed by the Buffer Block such as queueing (and handling oversubscription) are delegated to the Interface Block. In some PFE designs, there is a Fabric Block (called F Block, XF Block or a variation thereof) that serves as a mediator between the Memory Block and the Fabric.

3. Queuing (Q) Block: A Concern for Priority

Basic Queueing, which determines the order of servicing packets and the priority and resources (such as bandwidth and buffer space) allocated to each packet, is handled by the Memory Block, as mentioned above (and for that it is sometimes called MQ). Some applications require more granular queueing to deal with multiple subscribers served by a single port. Such is the case when a router is used as a Broadband Remote Access Server (BRAS) or a Broadband Network Gateway (BNG) to terminate last mile connections to Internet residential subscribers (such as DSL or FTTH users). In such applications, queueing calls for an additional block called the Queueing Block, which provides multi-level hierarchical Class of Service and queueing. Juniper Networks calls such queueing Hierarchical Class of Service (HCoS) or Dense Queuing (DQ).

III. A Higher Resolution Look: A Fuller Forwarding Picture (Keys, Services and Labels)

From the above, we have seen that the forwarding lookup is the core function of the PFE and its historical first. This is complemented by some layer 2 processing, which usually involves associating the packet with an ifl (a logical interface, a unit or a sub-interface). The lookup includes a MAC (Media Access Control) address lookup, which identifies the MAC address of the next hop. Sometimes it includes a label lookup as well (for MPLS traffic).

As technology evolved, more was demanded from the PFE. To enable more flexibility and granularity in traffic engineering, forwarding nowadays is not confined to destination-based forwarding. The forwarding of a packet by the PFE can take into account other packet fields such as the source address, the value of the TOS (Type of Service) Byte and the UDP and TCP port numbers. Forwarding based on such fields is called by Junos Filter-based Forwarding (FBF) and by other platforms Policy-based Routing (PBR) among other names. With FBF, the FIB will contain a mapping between, not only destination addresses, but also other packet fields (called Keys) and next hops.
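Forwarding on keys other than the destination can be pictured as an ordered rule list checked before the normal lookup result is used. This is a conceptual sketch only; the field names, the rule format and the first-match policy are assumptions, not a description of how Junos implements FBF.

```python
def fbf_lookup(rules, packet, default_next_hop):
    """Return the next hop of the first rule whose keys all match the packet."""
    for keys, next_hop in rules:
        if all(packet.get(field) == value for field, value in keys.items()):
            return next_hop
    return default_next_hop        # fall back to destination-based forwarding

rules = [
    ({"src": "10.0.0.5", "dport": 443}, "ge-0/0/1"),   # match on source + port
    ({"tos": 0xB8}, "ge-0/0/2"),                       # match on the TOS byte
]
packet = {"src": "10.0.0.5", "dst": "192.0.2.1", "dport": 443, "tos": 0}
chosen = fbf_lookup(rules, packet, default_next_hop="ge-0/0/0")
```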

In addition to the inclusion of more packet fields in the forwarding decision process, labels were introduced into the packet switching world by the advent of MPLS (Multi-Protocol Label Switching). With the advent of MPLS, the FIB became a place for storing label forwarding entries as well as prefixes.

When a labeled packet arrives at the PFE’s shore, its upper (outermost) label is inspected and a matching entry is sought in the FIB using a hash table. The matching entry will indicate the next hop. The next hop will specify the outbound interface, the MAC address of the interface of the next router along the path in addition to a label operation and a label value if the operation is a swap or push. In most transit routers, this operation is a swap operation, but could also be a pop, a push or a combination of operations, depending on the router’s location within the topology and the services (such as local protection or fast reroute) it is offering.
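Because a label lookup is an exact match, a dictionary is a natural stand-in for the hash table. The sketch below models the label stack as a list with the outermost label first and applies a swap, pop or push; the entry layout and the label values are invented for the example, and the next hop's MAC address is left out for brevity.

```python
def forward_labeled(lfib, label_stack):
    """Apply the entry for the top label; return (new_stack, interface)."""
    entry = lfib[label_stack[0]]           # exact match on the outermost label
    rest = label_stack[1:]
    if entry["op"] == "swap":
        new_stack = [entry["out_label"]] + rest
    elif entry["op"] == "push":
        new_stack = [entry["out_label"]] + label_stack
    else:                                  # "pop"
        new_stack = rest
    return new_stack, entry["interface"]

lfib = {
    100: {"op": "swap", "out_label": 200, "interface": "ge-0/0/1"},
    300: {"op": "pop", "interface": "ge-0/0/2"},
    400: {"op": "push", "out_label": 500, "interface": "ge-0/0/3"},
}
swapped = forward_labeled(lfib, [100, 16])
```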

In addition to Policy-based Routing and MPLS, new forwarding functions, called forwarding services (or simply services), were incorporated into the PFE to achieve various performance, security and monitoring objectives. With the introduction of services, the demand on the PFE became more than the relatively simple lookup and forward sequence. Services are additional functions, mostly handled by the Lookup Block, that manipulate the packet’s header or the entire packet, or that determine whether the packet is to be forwarded, how fast it is to be forwarded and how many resources (such as bandwidth and buffer space) are allocated to servicing it.

Some services, such as multicast and sampling, don’t change packets. Some, like Network Address Translation (NAT), manipulate addressing and port fields located in the header. Some services, such as IPSec encryption, radically change packets and their headers. Services required to offer Class of Service (CoS) include classification, policing, filtering, scheduling (forwarding prioritization and bandwidth allocation), shaping and marking (coloring).

Encapsulation and decapsulation, needed for tunneling (such as GRE tunneling, IPSec encapsulation or the multicast-in-unicast tunneling required as part of PIM-SM operation), are also additional services required from the PFE. In the past, many of these services, such as NAT, tunneling, sampling and flow export (jflow), necessitated the use of a special linecard or module called (in Juniper Networks’ world) a Multi-Service Module (such as the MS-DPC linecard and the MS-MIC module). Nowadays, the Lookup Block of the PFE is capable of doing most of these services (services are called “inline” when done by the Lookup Block in the PFE rather than by a dedicated hardware module).

All in all, the PFE, now that we have a more complete picture, is an engine that does route, flow, MAC and label lookups in addition to classification, scheduling (queueing and dequeuing), policing, filtering, accounting, sampling, mirroring, unicast and multicast reverse path checking, class-based routing, packet header re-writes, coloring (marking), encryption, decryption, encapsulation, decapsulation and more (recently, the latest PFEs can do telemetry and even participate in the generation of packets for bi-directional forwarding detection (BFD), a lightweight liveness protocol for rapid link failure detection).

IV. A Walk to Remember (WTR): A Packet's Journey across the Plains (Planes!)

In moving from router to router, a packet may undergo a series of radical conversions. It may fly through satellites beyond the boundary of the atmosphere and may dive in transoceanic fiber-optic links buried beneath the sea’s floor. It could be carried as electric pulses, flashes of laser or radio wave undulations. No matter how radical these transformations are, they represent no change to the abstract nature of the packet, the string of bits bundled in its header and body.

On the other hand, passing through the PFE of each router along the path, actual changes to the packet will take place. Let’s now follow the packet (a non-control packet!) in its journey through the router:

1- A packet is received on an ingress physical interface (built-in or a pluggable module). This is typically associated with the conversion from optical signaling to electrical.

2- The packet is transferred to the PFE through the PFE's WAN side.

3- The packet is stored in a Memory Block (buffer) in the PFE.

4- Layer 2 (Link Layer) frame encapsulation is processed. This involves error checking, identifying the encapsulated protocol (whether the packet is IPv4, IPv6 or MPLS) and stripping out the layer 2 headers.

5- The packet is typically chunked into cells.

6- The packet’s header (sometimes called HEAD) is sent to the Lookup Block (typically in the form of a Notification Cell), the essence of the PFE, responsible for mapping the destination address in the packet to an egress interface by looking up the address (or the label, if MPLS is used) in the forwarding table. The Lookup Block also determines the destination MAC address it should have upon leaving the router. The Lookup Block is also responsible for identifying the logical interface (ifl) the packet belongs to and applying any of the services we talked about in the previous section such as network address translation, policing and the like.

Note that an ifl is a virtual construct with no physical manifestations. Identifying a packet as belonging to an ifl, means that this packet will be processed according to the parameters associated with that ifl (such as multi-field classification or filtering). In a way, the ifl is a packet processing profile. For the outside world, only physical interfaces (ifd's) are real. Determining the ifl the packet belongs to is based on some demultiplexing field, typically the VLAN ID.

7- The lookup may result in multiple valid next hops (Equal Cost Multi Path or ECMP interfaces). In such a case, a single egress interface is selected based on a hash value computed from fields in the packet (called hashing keys), the ingress interface and other parameters. Using a hash ensures that packets belonging to the same flow follow the same path and thus avoid being reordered.

8- The TTL is decremented and the checksum field is recomputed (if the packet is an IPv6 packet, then the Hop Limit is decremented and there is no checksum field).

Note that the egress interface determined by the lookup could be in the same PFE, same linecard but on a different PFE, or in different linecards altogether.

9- If the egress interface is on the same PFE, then the packet is sent to it directly, where it gets encapsulated in a layer 2 frame and punted into the link. If the egress interface is on another linecard, then the packet is transmitted on the fabric to that other linecard, where another lookup takes place.

The above simplified rundown assumes that a route exists for the packet, that the frame is not corrupted, that the TTL is larger than 1 and that no services other than unicast forwarding are required (no multicast, no sampling, no classification, no rate limiting, no filtering and no address translation). It also assumes an Ethernet frame.
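The header adjustment in step 8 can be sketched for IPv4 as follows. The checksum routine follows the standard one's-complement sum over 16-bit words; recomputing it in full is the simplest correct approach, though hardware may well update it incrementally. The function names are illustrative, and the sketch assumes a 20-byte header with a TTL greater than 1.

```python
import struct

def ipv4_checksum(header):
    """One's-complement of the one's-complement sum of the 16-bit words."""
    total = sum(struct.unpack("!%dH" % (len(header) // 2), header))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)   # fold the carries back in
    return ~total & 0xFFFF

def decrement_ttl(header):
    """Return a copy of an IPv4 header with TTL - 1 and a fresh checksum."""
    hdr = bytearray(header)
    hdr[8] -= 1                     # TTL is the ninth byte of the header
    hdr[10:12] = b"\x00\x00"        # checksum field must be zero while summing
    hdr[10:12] = struct.pack("!H", ipv4_checksum(bytes(hdr)))
    return bytes(hdr)

# A sample 20-byte header (TTL 0x40, UDP, 192.168.0.1 -> 192.168.0.199).
received = bytes.fromhex("45000073000040004011b861c0a80001c0a800c7")
forwarded = decrement_ttl(received)
```

A header with a valid checksum sums to zero when the checksum field is included, which is a handy self-check on both the received and the forwarded copy.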

PART THREE: CONCRETE VIEW

I. Engineering the PFE

The first routers, those that appeared in the 1980s, were software-based routers. Control and forwarding were handled by a general-purpose processor (an off-the-shelf CPU). Juniper Networks pioneered the current architecture, which is based on a strict separation between the control plane and the forwarding plane. Nowadays, a PFE is a specialized piece of silicon-ware implemented as an Application Specific Integrated Circuit (ASIC), a set of ASICs or based on a specialized type of processor called an NPU (Network Processing Unit). An NPU can be thought of as a programmable PFE that has some fundamental forwarding primitives burned-in (built-in), while at the same time being programmable via what is known as microcode. Generally speaking, the more hardwired the design is, the higher the performance but the lower the flexibility in adding features.

Processing a packet is in itself a simple task, even with services (such as NAT and multicast). What makes engineering a PFE challenging is that it has very little space (on the chip) to implement its functions and so little time to complete its processing. It has so little space because large chips are expensive and power consuming, yet the PFE is required to provide a myriad of services and to house large forwarding tables corresponding to hundreds of thousands of routes and label switching entries. The PFE has so little time since it has to be “wire speed”, in an era where multi-gigabits of traffic and millions of packets are expected per second. A PFE is designed to cope with a flood of packets arriving at the WAN side and demanding processing that is to be done in the shortest possible window of time.

Processing is to be done on an optimized and highly customized hardware as much as feasible. It must be done in one pass unless it can't be helped. Distributed processing within the PFE or among PFEs is to be always considered. Computations are to be done once and cached whenever possible.

In each succeeding generation, PFE designs grow in the packets per second and bits per second they can handle. These designs strive to consume less power and subsume more functions beyond pure forwarding. Moore’s law predicts (in a self-fulfilling manner) that the number of functions that could be implemented in a certain chip area grows almost exponentially with time. PFE designs take advantage of that by moving to smaller transistor sizes as they become economically feasible. This reduces power consumption and enables packing more transistors (and thus more functions and services) within a smaller area. The first Juniper Networks router, the M40, released in 1998, had a PFE that was based on 250 nanometer (nm) CMOS transistors. The first Trio-based MX routers, released in 2009, had a 65nm ASIC. The Juniper Networks JunosExpress PFE chip (which is used for the PTX converged Core router), to provide another example, utilizes 40nm transistor fabrication technology to host 3.55 billion transistors. Nowadays, it is possible to craft a PFE ASIC fabricated with sub-10nm transistors.

PFE chips may be engineered in-house or obtained from companies that specialize in PFE and Network Processor (a Network Processor is just a programmable PFE) design, such as Broadcom and Mellanox Technologies, which licenses the NP-4 PFE processor. Sometimes a combination of both in-house and off-the-shelf components is employed. The first generation of MX routers (2007) had a PFE chip called i-chip for most of its functions, with an off-the-shelf chip from EZchip for Ethernet layer 2 processing. Whether to go in-house or outsource the PFE engineering depends on cost, features and time to market considerations. An in-house PFE design gives the router maker more control and sharper clarity regarding the evolution roadmap of the PFE. It also enables the router maker to scale performance without forced software changes (at the microcode level) and loss of backward compatibility.

Linecards are the trays on which PFEs are served. A linecard is engineered to host one or more PFE complexes that are typically fixed on the linecard. Interfaces (ports) hosted on the linecard may be built-in or modular. Juniper Networks calls a card that carries interfaces a Physical Interface Card (PIC). A linecard is called a PIC Concentrator (PC). Linecards for the early platforms were called Flexible PIC Concentrators (FPCs). When the first generation of the MX platform appeared, its linecards were called Dense Port Concentrators (DPCs). The linecards of the Trio-based second MX generation (called MX 3D) are called Modular Port Concentrators (MPCs), since each can host up to two modular components called Modular Interface Cards or MICs. Note that FPC is still used to generically refer to a Juniper Networks router linecard. In all recent Juniper Networks routers, a linecard hosts one, two or a handful of PFE complexes. Utilizing multiple PFEs in a linecard is a way of reusing an existing PFE to scale the capacity of the linecard (scaling via scale-out rather than scale-up).

II. Evaluating the PFE

The forwarding plane is the ultimate determinant of a router’s performance, and the PFE is at its heart.

The throughput of a linecard (which is based on the number of PFE complexes it hosts and the throughput of each) in Gigabits per second is one main performance metric. This is crucial by itself but also determines the maximum interface speed of the ports hosted on it: a linecard with a 40Gbps capacity can’t, for example, host a 100Gbps Ethernet interface. Note that some linecards may be designed to allow for oversubscription, which means that they can host interfaces whose aggregate traffic is larger than what the card’s PFE can support. A card with a 40Gbps forwarding capacity that can accommodate eight 10G interfaces (whose maximum total is 80Gbps) is oversubscribed by 2:1. Linecards may also vary in density (the number of interfaces of a certain type they can support). The number of logical interfaces supported on each physical interface is also a distinction between different PFE architectures.
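The oversubscription figure in the example above is simply the aggregate port speed divided by the forwarding capacity. A small helper (the name is ours) makes the arithmetic explicit:

```python
from fractions import Fraction

def oversubscription(port_speeds_gbps, pfe_capacity_gbps):
    """Ratio of aggregate port speed to forwarding capacity."""
    return Fraction(sum(port_speeds_gbps), pfe_capacity_gbps)

# Eight 10G ports on a card with a 40 Gbps forwarding capacity.
ratio = oversubscription([10] * 8, 40)
```

A ratio of 1 or less means the card can carry all of its ports at full rate simultaneously.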

Another performance metric is the number of packets per second the linecard is capable of handling. For linecards with the same Gbps throughput, this may differ, since smaller packets (such as 64-byte packets) are more demanding: they present more headers to process within each second. The datasheet throughput values are typically averages taken for unicast traffic with average packet sizes. Generally speaking, multicast throughput or throughput of IPv6 traffic may be lower.
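
To see why small packets are more demanding, here is a minimal Python sketch that converts a bit rate into the packet rate needed to fill an Ethernet link. It assumes standard Ethernet framing, where every frame costs an extra 20 bytes on the wire (preamble, start-of-frame delimiter and inter-frame gap); the function name is illustrative.

```python
# 7 bytes preamble + 1 byte start-of-frame delimiter + 12 bytes inter-frame gap
ETHERNET_OVERHEAD_BYTES = 20


def line_rate_pps(rate_bps, frame_bytes):
    """Packets per second needed to keep a link of rate_bps full of frame_bytes frames."""
    bits_on_wire = (frame_bytes + ETHERNET_OVERHEAD_BYTES) * 8
    return rate_bps / bits_on_wire


for size in (64, 512, 1518):
    print(f"{size:>5} bytes: {line_rate_pps(10e9, size) / 1e6:.2f} Mpps")
```

At 10Gbps, 64-byte frames call for roughly 14.88 million lookups per second, while 1518-byte frames need less than a million. The bit rate is the same, but the per-packet work differs by more than an order of magnitude.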

Multicast performance depends on how much fanout (output interfaces) is required in a network and how efficiently replication is done. Ingress replication, where the ingress PFE does all the copying, is the crudest multicast replication method and the least scalable. Modern PFEs employ clever schemes (some patented) to distribute replication among PFEs and achieve higher fanout, higher throughput and better latency.

When it comes to MPLS, linecards can differ in how deep a label stack each can handle and how deeply the PFE can inspect packets for the purpose of load balancing. With or without MPLS, the number of packet fields (called “keys”) that the PFE can take into consideration for load-balancing hashing is a major differentiator between PFEs.
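
The role of hash keys can be illustrated with a short Python sketch. It is only a model: a real PFE computes its hash in hardware with a vendor-specific function, whereas CRC32 is used here simply because it is deterministic and available in the standard library. The packet fields, interface names and the function `pick_next_hop` are all hypothetical.

```python
import zlib


def pick_next_hop(packet, keys, next_hops):
    """Hash the chosen header fields and map the result onto one of the equal-cost next hops."""
    material = "|".join(str(packet[k]) for k in keys).encode()
    return next_hops[zlib.crc32(material) % len(next_hops)]


packet = {"src_ip": "10.0.0.1", "dst_ip": "192.0.2.7", "proto": 6,
          "src_port": 49152, "dst_port": 443}
links = ["ge-0/0/0", "ge-0/0/1", "ge-0/0/2", "ge-0/0/3"]

l3_keys = ("src_ip", "dst_ip")                                    # addresses only
l4_keys = ("src_ip", "dst_ip", "proto", "src_port", "dst_port")  # full 5-tuple

print(pick_next_hop(packet, l3_keys, links))
print(pick_next_hop(packet, l4_keys, links))
```

With only the two addresses as keys, every flow between the same pair of hosts lands on the same link. Adding the protocol and port fields lets different flows between those hosts spread over several links, while the packets of any single flow still stay on one link and arrive in order.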

Power Efficiency in terms of Watts per Gigabit per second, called the “Efficiency Metric” or the Energy Consumption Rating (ECR), is also a major differentiator between PFEs. This metric, originally proposed by Juniper Networks and the Lawrence Berkeley National Laboratory, measures the amount of energy required to transport 1Gbps across the PFE and is to be determined with the PFE under test handling the full line capacity. Power efficiency depends on the architecture of the PFE, how smart power management is done, and the fabrication process used (how tiny the transistors in the PFE ASICs are), as mentioned in the Engineering section.
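
As a rough illustration of the metric, the Python sketch below divides power draw by throughput for two hypothetical PFEs. The wattage and throughput figures are invented for the example and do not describe any real product.

```python
def energy_consumption_rating(power_watts, throughput_gbps):
    """Watts consumed per Gbps forwarded, measured at full line capacity."""
    return power_watts / throughput_gbps


# Hypothetical figures, chosen only to show the comparison.
older_pfe = energy_consumption_rating(power_watts=400, throughput_gbps=100)
newer_pfe = energy_consumption_rating(power_watts=600, throughput_gbps=400)
print(f"older: {older_pfe:.1f} W/Gbps, newer: {newer_pfe:.1f} W/Gbps")
```

Note that the second PFE draws more power in absolute terms yet is the more efficient of the two, which is why the metric is normalized per Gbps.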

Obviously, the capacity of the FIB in the lookup engine to hold forwarding entries is a major differentiator between PFEs. Core routers are expected to be able to store from hundreds of thousands to a couple of million forwarding entries (such as those for IPv4 routes, IPv6 routes and MPLS label switching state). PFEs also differ in the amount of multicast state they can store (groups or source-group pairs and their associated input and output interfaces, among other information). In addition, each PFE platform has a certain capacity for each supported forwarding service: the number of NAT mappings supported and the number of filtering rules that can be applied to an interface depend on the PFE design.

An area that is highly forwarding plane dependent is CoS. Unlike routing and multicast, CoS is an area that is solely dependent on the PFE with no routing plane involvement (except for configuration). PFEs vary in the number of queues supported (the number of different traffic classes each can support), the space allocatable for each class and how sophisticated the scheduler is. The scheduler is a complex piece of siliconware that is at the heart of the CoS function. It is responsible for dequeuing packets waiting for transmission. It must be capable of allocating bandwidth for each class and of providing priority to some classes compared to others. Advanced CoS features include the ability to provide another level of scheduling within each class (Hierarchical or Dense Queueing).
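
The two duties of the scheduler, priority and bandwidth allocation, can be modeled in a few lines of Python. This is a toy sketch, not how any particular PFE does it: it combines one strict-priority queue with packet-count weighted round robin, whereas hardware schedulers typically account in bytes and add shaping. The class names and weights are assumptions made for the example.

```python
from collections import deque


class Scheduler:
    """Strict priority for one queue, weighted round robin for the rest."""

    def __init__(self, weights, strict_queue=None):
        self.weights = dict(weights)        # class name -> packets served per round
        self.strict_queue = strict_queue    # class that is always drained first
        self.queues = {name: deque() for name in self.weights}
        if strict_queue is not None:
            self.queues[strict_queue] = deque()

    def enqueue(self, traffic_class, packet):
        self.queues[traffic_class].append(packet)

    def dequeue_round(self):
        """Return the packets sent in one scheduling round, in transmit order."""
        sent = []
        if self.strict_queue is not None:
            strict = self.queues[self.strict_queue]
            while strict:
                sent.append(strict.popleft())
        for name, weight in self.weights.items():
            queue = self.queues[name]
            for _ in range(weight):
                if not queue:
                    break
                sent.append(queue.popleft())
        return sent


sched = Scheduler(weights={"gold": 3, "best_effort": 1}, strict_queue="voice")
for i in range(4):
    sched.enqueue("gold", f"g{i}")
    sched.enqueue("best_effort", f"b{i}")
sched.enqueue("voice", "v0")

first_round = sched.dequeue_round()
print(first_round)  # ['v0', 'g0', 'g1', 'g2', 'b0']
```

The voice packet jumps the line even though it was enqueued last, and the remaining transmit slots are split 3:1 between the two weighted classes.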

III. Folklore and Naming Galore

The word "PFE" is sometimes used to refer to the chipset, sometimes to all PFE complexes on a certain linecard and sometimes (very loosely) to the entire forwarding plane, which typically includes more than one PFE (two for unicast, more for multicast) in addition to the fabric.

Generally speaking, a PFE architecture will be given a name (like Trio, the chipset designed for the Juniper Networks Second Generation, or 3D, MX routers) and then each specific PFE chipset derived from that architecture will be given a name as well. The first generation Trio chipset, for example, was called “Trinity” (80Gbps), while the second generation was dubbed “Cassis” (130Gbps). To furnish another example, the efficiency-focused PFE chipset architecture named JunosExpress was designed for the PTX converged core router. The first incarnation of that architecture was a chipset called “Broadway”, which was followed by a chipset called “Paradise”.

Linecards also have names associated with them. MPC cards, for example, have names depending on the particular PFE chipset they house and the number of such chipsets on the card. Agent Smith, one of the most popular Juniper Networks MPC cards, is a linecard that hosts four Trio PFE complexes and offers 16 10GE interfaces. Centaur (a mythical creature that is half horse and half man) is the name assigned to a DPC linecard that is half 1GE (20 1GE ports) and half 10GE (two 10GE ports). The MPC3 linecard, which supports 100Gbps line rate and is based on the Cassis chip mentioned above, is called Hyperion.

IV. Last Words

The Routing Engine (RE) is a world of abstraction, a world of leisure and abundance of resources. It is a software-created world. Its operation is based on protocols that conform mostly to open standards. It utilizes a general purpose processor. It is the compute element of the router and what makes the router a router. Most of the packets the RE receives are destined to it.

Unlike the C-code realm of the RE, the PFE is a realm fashioned from silicon. The PFE is a tiny world of raw reality, a world of tight time windows and scarce resources. It is a hardware-constructed world. Its operation is based on proprietary chip designs that evolve at a high pace. It utilizes specialized hardware in the form of PLDs (Programmable Logic Devices), NPUs (Network Processing Units) or ASICs. It is what makes the router a forwarder. Most of the packets arriving at it are destined for somewhere else.

While most of the control plane is based on open standards, PFE designs are closed and proprietary. Access to the PFE usually requires issuing esoteric, cryptic commands on an unfriendly user interface. It is generally frowned upon, requires caution, and is mostly used by the engineers of the advanced Technical Assistance Center.

The RE is the brain, the PFE is the brawn. The RE is a mapper, while the PFE is a mass transit system. All the protocols, including routing, signaling and even SDN protocols, with their abstractions, finite state machines and fancy data structures are there to load the PFE with the finished product of raw, plain, forwarding state. The hype, the magic, is in the control plane. The secrecy, the mystery, is in the forwarding plane.

"The Routing Engine was made on account of the Forwarding Engine, and not the Forwarding Engine on account of the Routing Engine". Routing is a means to an end, but Forwarding is the ultimate goal. No complete mastery of network design, configuration or troubleshooting is possible without a solid grounding in both.

APPENDIX

I. Memory

Memory is used for two purposes in a PFE:

- Packet Storage: Packets arriving through an interface need to be stored in a buffer before processing and transmitting them. This memory needs to be of high density in order to cost-effectively hold packets. The memory that temporarily hosts packets before their fate is decided is part of the packet buffer module of the PFE.

- Forwarding Table Storage: The PFE needs to store a table of destinations and their corresponding next hop information (outgoing interface, destination MAC address among other parameters). This memory needs to allow for low latency lookups. The memory that stores the Forwarding Table (FIB or Forwarding Information Base) is part of the lookup (route) module of the PFE.

Memory, especially lookup memory, is a major contributor to the cost, speed and power consumption of the PFE.

There are two main approaches to implement the routing lookup memory:

1. Ternary Content Addressable Memory (TCAM): One approach is to rely on a specialty memory type that is tailor designed for near instant lookups. A TCAM is designed so that inputting a destination address will, in a flash, return the corresponding next hop information without searching or iterations. TCAM provides extremely fast lookups but is complex, has high power consumption and takes up a large area on the chip.

2. Trie on RAM: Another approach is to utilize a fast RAM variant such as SRAM or, more recently, RLDRAM, with a binary tree structure created on it to store the destinations and the corresponding next hop information. The binary tree used for that purpose is called a Trie. Junos calls it a jtree or a ktree. A vast body of literature and many proprietary schemes have been developed to provide algorithms for optimized search on tries.
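
The difference between the two approaches can be sketched in Python for IPv4. Both parts are simplified models resting on assumptions: the TCAM function walks its entries in a loop, whereas a real TCAM compares all entries in parallel in hardware, and the trie is a plain one-bit-per-level binary trie rather than the optimized structures that production PFEs use. The routes and interface names are invented.

```python
import ipaddress


def tcam_lookup(entries, address):
    """Model a TCAM: entries are (value, mask, next_hop), sorted longest mask first.

    Hardware compares every entry at once; this loop only reproduces the result.
    """
    addr = int(ipaddress.ip_address(address))
    for value, mask, next_hop in entries:
        if addr & mask == value:
            return next_hop
    return None


class BinaryTrie:
    """One address bit per level; a node may carry the next hop of the prefix ending there."""

    def __init__(self):
        self.root = {"children": [None, None], "next_hop": None}

    def insert(self, prefix, next_hop):
        net = ipaddress.ip_network(prefix)
        bits = int(net.network_address)
        node = self.root
        for i in range(net.prefixlen):
            bit = (bits >> (31 - i)) & 1
            if node["children"][bit] is None:
                node["children"][bit] = {"children": [None, None], "next_hop": None}
            node = node["children"][bit]
        node["next_hop"] = next_hop

    def lookup(self, address):
        """Walk down bit by bit, remembering the longest matching prefix seen so far."""
        bits = int(ipaddress.ip_address(address))
        node, best = self.root, self.root["next_hop"]
        for i in range(32):
            node = node["children"][(bits >> (31 - i)) & 1]
            if node is None:
                break
            if node["next_hop"] is not None:
                best = node["next_hop"]
        return best


routes = {"0.0.0.0/0": "ge-0/0/0", "10.0.0.0/8": "ge-0/0/1", "10.1.0.0/16": "ge-0/0/2"}

networks = [(ipaddress.ip_network(p), hop) for p, hop in routes.items()]
tcam = sorted(((int(n.network_address), int(n.netmask), hop) for n, hop in networks),
              key=lambda entry: entry[1], reverse=True)

trie = BinaryTrie()
for prefix, hop in routes.items():
    trie.insert(prefix, hop)

for address in ("10.1.2.3", "10.9.9.9", "8.8.8.8"):
    print(address, tcam_lookup(tcam, address), trie.lookup(address))
```

Both structures return the same longest-prefix match. The trade-off is in how they get there: the TCAM answers in a single step at the cost of dedicated, power-hungry hardware, while the trie takes up to one memory access per address bit but lives in ordinary RAM.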

When the time a memory technology takes to access a piece of data depends on the location of the data, it is called sequential. When the access time is the same for all data, the memory is called Random Access Memory (RAM). Apart from mass storage, all memory utilized in routers (and most electronic devices in general) is a RAM variant. Broadly speaking, RAM can be divided into two types: Dynamic RAM (DRAM) and Static RAM (SRAM). DRAM is the commodity type that has lower performance but is cheaper and consumes less power. SRAM is more expensive but has higher performance. High performance DRAM variants include SDRAM (Synchronous DRAM) and Double Data Rate DRAM (known as DDR). A DRAM variant that is gaining popularity in router design is RLDRAM (Reduced Latency DRAM), which is midway between DRAM and SRAM. It is semi-commodity, moderately low latency and performs better than fast DRAM variants such as DDR. RLDRAM is an ideal choice for high speed packet buffering and inspections.

Normally, a combination of SRAM, RLDRAM and TCAM is used within a PFE. TCAM is used for MAC address lookups and for cached route lookups. RLDRAM is used for FIB storage, while SRAM is used to store next hop information. DRAM is used for storing packets.

References

- Monge, Antonio Sanchez and Szarkowicz, Krzysztof Grzegorz, “MPLS in the SDN Era: Interoperable Scenarios to Make Network Scale to New Services”, O’Reilly Media.

- Wu, Weidong, “Packet Forwarding Technologies”, 1st Edition, Auerbach Publications (December 17, 2007).

- Giladi, Ran, “Network Processors: Architecture, Programming, and Implementation (Systems on Silicon)”, 1st Edition, Morgan Kaufmann (July 30, 2008).

- Thomas, Thomas, Pavlichek, Doris E., Dwyer, Lawrence H., Chowbay, Rajah and Downing, Wayne W., “Juniper Networks Reference Guide: JUNOS Routing, Configuration, and Architecture”, Addison-Wesley.

- Hanks, Richard Douglas and Reynolds, Harry, “Juniper MX Series”, O’Reilly Media.

- http://www.juniper.com/

Acknowledgment: I am very grateful to Ranpreet Singh, Senior Engineer at Juniper Networks, who volunteered precious time to review my article and provided valuable corrections, clarifications and suggestions. I’d be immensely grateful for any comments, corrections or suggestions to expand the article from the community of readers.

Disclaimer: The information presented in this article is meant to provide conceptual understanding. It is not intended to be used for product selection, capacity planning or operational considerations. Neither Juniper Networks nor my current employer is responsible for the accuracy of the presented information.